Overflow of techniques

Abstract

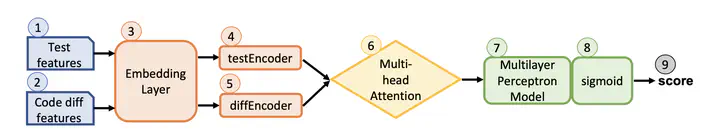

Regression testing (rerunning tests on each code version to detect newly broken functionality) is important and widely practiced. However, regression testing is costly due to the large number of tests and the high frequency of code changes. Regression test selection (RTS) optimizes regression testing by rerunning only the subset of tests that can be affected by changes. Researchers have shown that RTS based on program analysis can save substantial testing time for (medium-sized) open-source projects. Practitioners have also shown that RTS based on machine learning (ML) works well on very large code repositories, e.g., in Facebook’s monorepository. We combine analysis-based RTS and ML-based RTS by using the latter to choose a subset of the tests selected by the former. We first train several novel ML models to learn the impact of code changes on test outcomes, using a training dataset that we obtain via mutation analysis. Then, we evaluate the benefits of combining ML models with analysis-based RTS on 10 projects, compared with using each technique alone. Combining ML-based RTS with two analysis-based RTS techniques, Ekstazi and STARTS, selects 25.34% and 21.44% fewer tests than Ekstazi and STARTS alone, respectively.
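The combination of the two RTS styles can be pictured as a two-stage filter. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation. It assumes that analysis-based RTS reduces to intersecting each test's recorded file dependencies with the set of changed files (the real Ekstazi and STARTS track class-level dependencies, dynamically and statically respectively). It also assumes that the ML model is any function returning a score for a (test, change) pair, compared against a threshold. The names `analysis_based_select`, `combined_select`, `reduction_percent`, the threshold value, and the toy scores are all hypothetical.

```python
from typing import Callable, Dict, List, Set

# Hypothetical scorer type: (test name, changed files) -> estimated likelihood
# that the change affects the test's outcome (stand-in for a trained ML model).
Scorer = Callable[[str, Set[str]], float]


def analysis_based_select(test_deps: Dict[str, Set[str]], changed: Set[str]) -> List[str]:
    """Simplified analysis-based RTS (assumption, not Ekstazi/STARTS code):
    keep every test whose recorded dependencies overlap the changed files."""
    return sorted(t for t, deps in test_deps.items() if deps & changed)


def combined_select(test_deps: Dict[str, Set[str]], changed: Set[str],
                    score: Scorer, threshold: float) -> List[str]:
    """Apply the ML stage on top: keep only analysis-selected tests whose
    score reaches the (hypothetical) threshold."""
    candidates = analysis_based_select(test_deps, changed)
    return [t for t in candidates if score(t, changed) >= threshold]


def reduction_percent(baseline: List[str], combined: List[str]) -> float:
    """Percentage of the baseline's selected tests that the combination drops."""
    if not baseline:
        return 0.0
    return 100.0 * (len(baseline) - len(combined)) / len(baseline)


if __name__ == "__main__":
    test_deps = {
        "FooTest": {"Foo.java", "Util.java"},
        "BarTest": {"Bar.java", "Util.java"},
        "BazTest": {"Baz.java"},
    }
    changed = {"Util.java"}
    # Hypothetical model outputs; a real model would compute these from the change.
    toy_scores = {"FooTest": 0.9, "BarTest": 0.1, "BazTest": 0.8}

    def score(test: str, _changed: Set[str]) -> float:
        return toy_scores[test]

    baseline = analysis_based_select(test_deps, changed)
    combined = combined_select(test_deps, changed, score, threshold=0.5)
    print(baseline)                               # ['BarTest', 'FooTest']
    print(combined)                               # ['FooTest']
    print(reduction_percent(baseline, combined))  # 50.0
```

Because the ML stage only filters the analysis-based selection, the combined set is always a subset of it, so the reduction is naturally measured against the analysis-based technique alone. A fixed threshold is just one possible policy; keeping the top-k ranked tests would fit the same structure.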